How to stream data to BigQuery using a job and test it with RSpec

BigQuery is Google's managed data warehouse, offered as SaaS and accessed through a REST API. It can be combined with MapReduce and has machine learning capabilities.

This post covers sending data to BigQuery. There are several ways to send data from Ruby, for example:

```ruby
# Batch load: upload a CSV file from Cloud Storage
table.load "gs://my-bucket/file-name.csv"

# Streaming insert: send rows as JSON
table.insert data_rows
```

In this tutorial we use the second option (streaming JSON). For a complete description, see this link.

Step 1: Create a service account and install the gems

This assumes you already have Rails 6 installed. First, make sure the gems below are in your Gemfile. rspec-rails and webmock are used to test the BigQuery service.

```ruby
gem 'google-cloud-bigquery'

group :test do
  gem 'rspec-rails'
  gem 'webmock'
end
```

Then install them with `bundle install`.

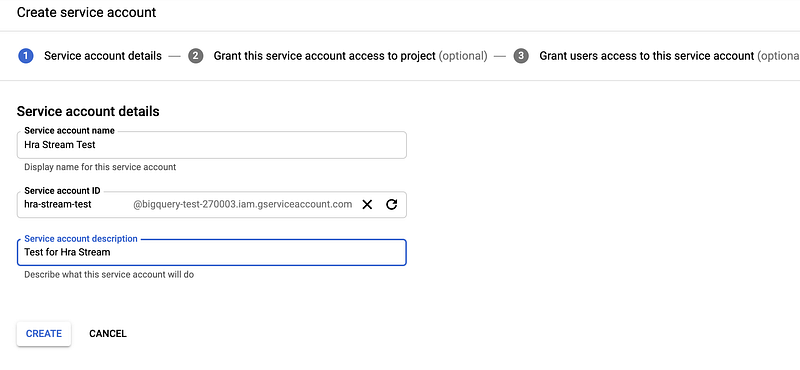

The gem authenticates with a service account key. Before creating the key, we need to define a role for the service account. Since we only need to stream data, and want to prevent the account from altering or deleting tables, we register only these permissions:

https://gist.github.com/kusumandaru/e1b3ddb34e96edd9a4a3452d060bf1c3

For example, name this role BigQuery Stream.

Go back to Service Accounts, create a new service account, then assign this role to it.

Choose JSON as the key type and save the key file to your local machine. Keep this file secure and never commit it to a repository.

Step 2: Create a service to stream data

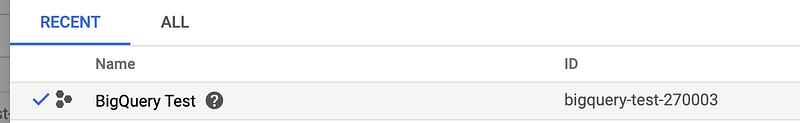

Set environment variables for the credential path and the project ID. Keep the credential JSON file outside the project directory so it is not accidentally pushed to the repository.

BIGQUERY_CREDENTIAL_PATH=/path-to-file/bigquery.json

BIGQUERY_PROJECT_ID=bigquery-test-270003
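A minimal sketch of reading these two variables, assuming both are required. `ENV.fetch` raises `KeyError` when a variable is missing, so a misconfigured environment fails at startup instead of on the first insert. The `BigqueryConfig` name is hypothetical and not part of the original code.

```ruby
# Hypothetical helper: reads the BigQuery settings from the environment.
# ENV.fetch (and Hash#fetch) raise KeyError if a variable is unset,
# so a missing setting fails fast instead of surfacing later.
BigqueryConfig = Struct.new(:credential_path, :project_id) do
  def self.from_env(env = ENV)
    new(
      env.fetch("BIGQUERY_CREDENTIAL_PATH"),
      env.fetch("BIGQUERY_PROJECT_ID")
    )
  end
end
```

In the app this would be called as `BigqueryConfig.from_env`; passing a plain hash instead of `ENV` keeps it easy to test.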

Next, create a base service class that connects to BigQuery, for example base.rb:

https://gist.github.com/kusumandaru/8fab31f9b014e3d0df18b5ff9ec66de8

At the top of the file we require the BigQuery library, and we use ActiveModel to collect errors.

The configuration is read from environment variables, and the BigQuery client is created in the initialize method.
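A sketch of such an initialize method, assuming the client is built with `Google::Cloud::Bigquery.new(project_id:, credentials:)` from the google-cloud-bigquery gem, where `credentials` can be the path to the key file. The injectable `client_factory` is an addition for illustration (not in the original) so the class can be exercised without network access; the gem is only required when the default factory runs.

```ruby
module BigQuery
  class Base
    attr_reader :client

    # Default factory: builds a real client from the gem. The require is
    # inside the lambda so the class loads even where the gem is absent.
    DEFAULT_FACTORY = lambda do |project_id:, credentials:|
      require "google/cloud/bigquery"
      Google::Cloud::Bigquery.new(project_id: project_id, credentials: credentials)
    end

    # env and client_factory are hypothetical injection points for tests.
    def initialize(env: ENV, client_factory: DEFAULT_FACTORY)
      @client = client_factory.call(
        project_id: env.fetch("BIGQUERY_PROJECT_ID"),
        credentials: env.fetch("BIGQUERY_CREDENTIAL_PATH") # path to the JSON key
      )
    end
  end
end
```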

Because the base class acts as a superclass, each subclass defines its own dataset and table IDs. The base class loads that dataset and table, then calls the method that sends the JSON data to BigQuery.
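The inheritance pattern can be sketched in isolation: the base class looks the constants up on the concrete class at runtime with `self.class::DATASET_ID`, so each subclass only declares its IDs. The `UserStream` subclass and its ID values are hypothetical placeholders.

```ruby
module BigQuery
  class Base
    # Resolved on the concrete subclass; calling these on Base itself
    # raises NameError because Base defines no IDs.
    def dataset_id
      self.class::DATASET_ID
    end

    def table_id
      self.class::TABLE_ID
    end
  end

  # Hypothetical subclass; the IDs are placeholders.
  class UserStream < Base
    DATASET_ID = "my_dataset"
    TABLE_ID = "users"
  end
end
```

Keeping the IDs as constants makes a missing definition fail loudly with `NameError` rather than silently writing to the wrong table.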

Before streaming, we check that the table exists in BigQuery. We then send the data and check whether the response succeeded. If it failed, we collect the errors to show to the user and return false; otherwise we return true.
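The flow above can be sketched as follows. It assumes the client behaves like the gem's project object: `dataset(id)` and `table(id)` return nil when not found, and `table.insert(rows)` returns a response with `success?` and `insert_errors`, where each insert error has an `index` and an `errors` list. The error entries are assumed to be hashes with a `"message"` key, and a plain array stands in for ActiveModel errors.

```ruby
module BigQuery
  class Base
    attr_reader :errors

    def initialize(client)
      @client = client
      @errors = [] # plain array standing in for ActiveModel::Errors
    end

    # Returns true when every row was accepted, false otherwise.
    def stream(rows)
      table = find_table
      unless table
        @errors << "table #{self.class::DATASET_ID}.#{self.class::TABLE_ID} not found"
        return false
      end

      response = table.insert(rows)
      return true if response.success?

      # Each insert error points at the failing row index; its errors are
      # assumed to be hashes carrying a "message" key.
      response.insert_errors.each do |insert_error|
        insert_error.errors.each do |err|
          @errors << "row #{insert_error.index}: #{err['message']}"
        end
      end
      false
    end

    private

    # nil if either the dataset or the table does not exist
    def find_table
      dataset = @client.dataset(self.class::DATASET_ID)
      dataset&.table(self.class::TABLE_ID)
    end
  end
end
```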

Then we can define a subclass for each kind of data. For example, to send the User model:

https://gist.github.com/kusumandaru/2d56682d521f143b9563e2b2e83a132d

This subclass defines DATASET_ID and TABLE_ID for the service and converts the model to JSON with the as_json method.
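The row-building step can be sketched without Rails. In Rails, `user.as_json(only: %i[id email])` returns a similar string-keyed hash; here a `Struct` stands in for the ActiveRecord model, and the `UserRow` name, the column list and the ID values are hypothetical. Selecting columns explicitly also keeps fields such as `password_digest` out of the warehouse.

```ruby
require "json"

# Hypothetical stand-in for the ActiveRecord User model.
User = Struct.new(:id, :email, :password_digest, keyword_init: true)

module BigQuery
  class UserRow
    DATASET_ID = "my_dataset" # placeholder
    TABLE_ID = "users"        # placeholder
    # Only columns that exist in the (assumed) BigQuery table schema.
    COLUMNS = %i[id email].freeze

    # Returns a string-keyed hash, the shape table.insert expects per row.
    def self.build(user)
      user.to_h.slice(*COLUMNS).transform_keys(&:to_s)
    end
  end
end
```

For a user with `id: 1` and `email: "a@example.com"`, `BigQuery::UserRow.build(user)` gives `{"id"=>1, "email"=>"a@example.com"}` and drops `password_digest`.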

For testing, we define a shared example that mocks BigQuery: it stubs each request and returns a JSON response body.
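A sketch of helpers for building those stubbed bodies, based on the response of BigQuery's `tabledata.insertAll` REST method: a successful call returns `kind` only, and a failed one adds `insertErrors` entries with a row `index` and an `errors` list. The module name, helper names and URL pattern are hypothetical; the pattern deliberately ignores the host and any query string.

```ruby
require "json"

# Hypothetical helpers for WebMock stubs of tabledata.insertAll.
module BigQueryStubBodies
  # Matches .../datasets/<d>/tables/<t>/insertAll on any host.
  INSERT_ALL_URL = %r{/datasets/[^/]+/tables/[^/]+/insertAll}

  module_function

  def success
    JSON.generate("kind" => "bigquery#tableDataInsertAllResponse")
  end

  # One failed row with a single error entry.
  def failure(index:, reason:, message:)
    JSON.generate(
      "kind" => "bigquery#tableDataInsertAllResponse",
      "insertErrors" => [
        { "index" => index, "errors" => [{ "reason" => reason, "message" => message }] }
      ]
    )
  end
end
```

Inside the shared example these would feed `stub_request(:post, BigQueryStubBodies::INSERT_ALL_URL).to_return(status: 200, body: BigQueryStubBodies.success, headers: { "Content-Type" => "application/json" })`. The gem's dataset and table lookups issue their own GET requests, which need separate stubs.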

https://gist.github.com/kusumandaru/57de95c5248452c6e9df80d694d6678d

Then we write a spec to test this class:

https://gist.github.com/kusumandaru/b47f0262efc2a142ea7267d40f64359f

As the final step, we create a job so that streaming runs as a background task:

https://gist.github.com/kusumandaru/a3d2fc9ec0f83531dc512ff36ff6e0b3

The job checks that the record still exists before sending it, and then calls the service.
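The job's logic can be sketched in plain Ruby. In the Rails app it would be `class UserStreamJob < ApplicationJob` with `perform(user_id)`; here the record lookup and the service are injected through the constructor (a hypothetical change for illustration) so the logic can run without Rails. Passing the ID rather than the record means the job looks the user up when it runs, which is why the existence check matters.

```ruby
module BigQuery
  # Plain-Ruby sketch of the job body; not an ActiveJob subclass.
  class UserStreamJob
    # find_user: callable returning the user or nil,
    #            e.g. ->(id) { User.find_by(id: id) } in Rails
    # service:   callable that streams one user and returns true/false
    def initialize(find_user:, service:)
      @find_user = find_user
      @service = service
    end

    def perform(user_id)
      user = @find_user.call(user_id)
      # The record may have been deleted before the job ran; nothing to send.
      return false if user.nil?

      @service.call(user)
    end
  end
end
```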

The job is tested with this spec:

https://gist.github.com/kusumandaru/54d21ce01b085fc575bf7382251ae27e

You can then enqueue the job with:

BigQuery::UserStreamJob.perform_later(some_user.id)

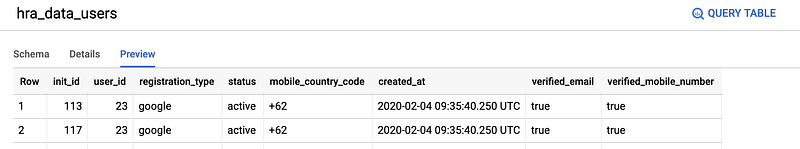

Check the BigQuery console to confirm that the data was inserted successfully.